The hype around artificial intelligence (AI) and data-driven solutions for managing the pandemic has met stiff resistance from those who are concerned about the lasting social impacts of these technologies. While much of the media narrative labels those impacts simply as “data privacy concerns,” the issues go far beyond the unauthorized or overbroad use of data that this framing suggests. When quarantine enforcement algorithms are triggering police action, which communities are likely to disproportionately bear the brunt of these enhanced policing powers? If algorithms are deciding who might be able to go to work when the lockdown is over, what recourse do people have to question these systems if they get it wrong? While data protection laws can provide a solid foundation for addressing concerns around data sharing and unauthorized surveillance, there are many questions around discrimination, profiling and accountability that could benefit from a framing that goes beyond privacy.

We grappled with this issue in our recent submission to the Canadian Office of the Privacy Commissioner (OPC) public consultation on whether (and how) the Personal Information Protection and Electronic Documents Act (PIPEDA) should be reformed to apply to AI technologies. Looking internationally, data protection or information privacy laws, in the countries that have them, are often the first port of call when searching for legal tools to hold AI systems accountable. In Europe, for example, provisions in the General Data Protection Regulation (GDPR) are being operationalized to bring more transparency and accountability to the use of AI. While data privacy law can and should be applied to AI, the data privacy lens is inherently limited in scope and, as a result, could fail to provide meaningful redress to the communities that are directly and disproportionately harmed by these technologies.

Data Protection Law as a Tool to Regulate AI

Some of the most common AI systems (facial recognition software and credit-scoring apps, for example) involve personally identifiable information (PII) at multiple stages, from training the AI model to forming part of the system’s output. This use of personal data is the threshold condition for data protection laws to apply; once it is met, it sets in motion a series of legal obligations on entities using AI.

First, there is the requirement of consent for the use of any personal information in an AI system, a cornerstone of PIPEDA and other data protection laws. Consent isn’t always a meaningful safeguard, especially when people will be disadvantaged by the “choice” of opting out. But it can ensure transparency about how an individual’s data is being used. Take Clearview AI, for example, which was exposed for collecting people’s face data from social media without their knowledge and then processing the data with an app that was accessed by many public, private and law enforcement agencies. Had Clearview sought consent, the public would have had an opportunity to scrutinize the system. Based on the lack of consent, several lawsuits have been filed against the company under the Illinois Biometric Information Privacy Act.

Second, principles of data minimization and purpose limitation can draw sharp lines to prohibit certain kinds of AI use, such as biometric recognition. The Swedish data protection regulator, for example, recently banned facial recognition in schools, based on the principle of data minimization, which requires that only the minimum amount of sensitive data needed to fulfill a defined purpose be collected. Collecting facial data in schools that could be used for a number of unforeseen purposes, it ruled, was neither a necessary nor a proportionate way to register attendance.

Third, requirements such as data protection impact assessments (DPIAs) can be used to evaluate and mitigate the risks from AI systems. Although DPIAs are typically viewed as mechanisms to ensure compliance with data protection law, the process opens the door for reflection on the broader impacts of AI. As the UK Information Commissioner’s Office draft auditing framework explains, making sure that data processing is done “lawfully, fairly and in a transparent manner” (a requirement under the GDPR) means moving beyond data privacy to evaluating the risk of discrimination and bias, which have long plagued the use of AI.

The Limits of a Data Privacy Lens

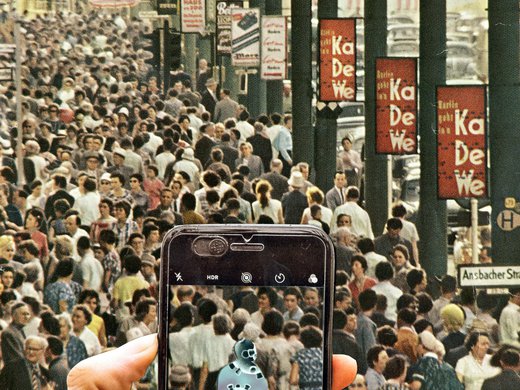

Despite these opportunities, looking at the AI regulation problem through the prism of data protection law can create its own blind spots. The distinction between personal and non-personal data often crumbles under the realities of how data is generated and applied in AI technologies. For one thing, apparently anonymous and discrete data categories are often combined (“re-identified”) to reveal personal information. More importantly, focusing on the individual identifiability of the data ignores how algorithmic profiling works, which is often through classification schemes or pattern recognition. Even when AI systems involve data that doesn’t explicitly pertain to humans at all (such as vehicle density data for AI used in so-called “smart city” projects, or data for AI sensors used in precision agriculture), they eventually shape decisions with real consequences for the lives and livelihoods of communities. The predictions and determinations made by an AI system may have significant personal consequences, even as the data the system relies on appears mundane and non-personal.

In fact, this focus on individual actors and individual harms is ill-suited to how AI technologies operate in society. For instance, AI technologies applied in the context of allocating social services tend to implicate communities and groups, attempting to predict individual behavior based on group characteristics, or to target entire communities based on historical practices and policies. This is especially true for predictive policing technologies.

The individualistic approach of data privacy and protection policies fails to capture the full spectrum of harms produced by AI technologies, specifically discrimination, exclusion and hypervisibility. These harms often relate to existing power dynamics, so legal frameworks must shift the focus from threshold questions, like whether the data is personal, to questions about the outcomes produced or facilitated by AI, or about the impact of an AI-informed decision.

Shifting legal frameworks to focus on AI’s impact is also important for understanding the limitations of prevailing and prospective interventions. For example, while some think documentation requirements like DPIAs are a step in the right direction, these practices are not a silver bullet. They can help provide more information and context about a data set, a potential model and other components of AI technologies, but they cannot reveal (and can sometimes conceal) underlying systemic or institutional problems, such as discriminatory policies and corruption. Institutional problems may be reflected in a given data set but not acknowledged by subsequent users of the data, such as when corrupt policing practices skew crime data that is used to inform policing decisions or to develop AI policing technologies, like predictive policing.

AI Regulation Must Focus on Impact and Accountability

AI technologies have laid bare the limitations of existing data protection frameworks and the assumptions on which they have been built. Forward-looking regulatory proposals, such as algorithmic accountability frameworks, should not be confined to a data privacy lens alone. Broader frameworks, such as accountability, can help address categories of harm and interests that are related to, yet not specific to, data processing.

For example, the Algorithmic Accountability Act of 2019, a proposed bill in the United States Congress, attempts to regulate AI with an accountability framework. The bill would require companies to evaluate the privacy and security of consumer data as well as the social impact of their technology, and includes specific requirements to assess discrimination, bias, fairness and safety. Similarly, the Canadian Treasury Board’s 2019 Directive on Automated Decision-Making requires federal government agencies to conduct an algorithmic impact assessment of any AI tools they use. The assessment process includes ongoing testing and monitoring for “unintended data biases and other factors that may unfairly impact the outcomes.”

An accountability lens will also be critical in the move toward frameworks that mandate civic participation and transparency. As recent high-profile smart city projects indicate, large-scale AI projects lead to an increasing consolidation of power in the hands of private companies to make decisions about civic life. The #BlockSidewalk campaign notes that Toronto’s Sidewalk Labs smart city project “is as much about privatization and corporate control as it is about privacy.” Policies around AI must, therefore, be responsive to this broader political economy, and focus on ensuring that those directly impacted have a meaningful say in whether these systems are used at all, and in whose interest.