This transcript was completed with the aid of computer voice recognition software. If you notice an error in this transcript, please let us know by contacting us here.

Joanna Bryson: People are almost defining AI as that which cannot be regulated. So some companies are trying to say that if there's not deep neural networks in it, it's not AI. Even if there's a deep neural network in it, you can still say, "Okay, so how was it developed? How was it tested? Which data did they use? What was it designed to do? How is the system architected that used deep networks in it? How are you monitoring it?" Every aspect of that is human activity and it can be logged and it can show whether you've done due diligence or not. So I think what we should be doing, let's not even argue about what AI is, let's just talk about how do you govern the manufacturing of software. And you can go through and account for whether it was done accurately and legitimately and with due diligence.

[MUSIC]

David Skok: Hello and welcome to Big Tech, a podcast about the emerging technologies that are reshaping democracy, the economy and society. I'm David Skok.

Taylor Owen: And I'm Taylor Owen. On this episode of the podcast, we're talking about artificial intelligence, ethics and the responsibilities of developers when building AI products and services.

David Skok: And of course the role of governments too, right Taylor?

Taylor Owen: Absolutely. Am I that predictable?

David Skok: Well, I say it jokingly but in all honesty, societies are already feeling the impact of AI and the question is how do governments regulate the technology without stifling the innovation that it can deliver?

Taylor Owen: Absolutely, and increasingly AI is poised to bump up against a wide range of spaces that states traditionally have responsibility for: the digital economy, the employment market, policing and criminal sentencing, the protection of minority rights. All of these things are coming into the purview of governments and their relationship with AI. So how we are going to govern AI, at the same time as the technology is barreling forward in industry, has become an urgent public policy issue.

David Skok: Without question. And so in this episode we speak with Joanna Bryson.

Taylor Owen: Joanna's current projects include accountability and transparency in the development of AI, technology's impact on economic and political behaviour, and work on AI governance.

David Skok: She is beginning a new role as professor of ethics and technology at the Hertie School of Governance in Berlin. Hello Joanna, welcome to the show.

Joanna Bryson: Hi.

David Skok: I'm curious, at a high level, if we could start with something pretty basic, which is: what is intelligence?

Joanna Bryson: Whoa. Okay. Yeah, that's pretty much starting at the beginning. I actually have three favourite definitions of intelligence. So when I'm trying to talk about regulation, I use the one that I was first taught when I was an undergraduate and that, I've recently figured out, actually goes back to 1883. So it was actually a seminal book on animal behaviour and they needed to figure out how to compare intelligence between species. And so that one is just saying that you're more intelligent to the extent that you're more able to do the right thing at the right time. So another way of phrasing that is that it's a computation, that you're converting the information you have about the current context into an action. And so that's why I really like it for the purpose of regulation, because if you're trying to explain to people what is and isn't a threat of intelligence in general, and artificial intelligence in specific, then you make it clear that it's a physical process. It's not magic. It's not that one country will suddenly find an algorithm that will give them omniscience. Everything is a physical process that takes time, space, and energy to compute something, including to compute an action.

David Skok: It's so interesting to hear your definition of intelligence and you're using a lot of engineering language. So what then is the definition of artificial intelligence?

Joanna Bryson: So artificial intelligence is anything that's an intelligent thing, that's an artifact. And again, that's really useful from the perspective of trying to do regulation, just to remind people that the AI is the I that we're responsible for, that somebody has made the deliberate choice to create. And sometimes people try to get out of that responsibility by putting in a random number generator or something and say, "Oh, it was autonomous because I built it this particular way." No, you built it that way and that was your decision. That's like if you shoot a gun into the air in a crowded place and the bullet comes down and hits someone, you're still responsible for that action.

Taylor Owen: Right. But what happens when AI starts to do things like original writing of news articles or stories, and it becomes very difficult to see the line between code and intelligence?

Joanna Bryson: The stereotypical, simplest case for the kind of intelligence that requires action generated from context is a thermostat. And apparently, the entire Chinese room argument, which a lot of philosophy students have to learn in their first class on AI, which was written by a philosopher named Searle, was written because that guy had been at a party with someone else. I've variously heard that was Marvin Minsky or that it was John McCarthy. But anyway, it was one of the first generation AI people, who argued that a thermostat was intelligent, and the philosopher just flipped out and got really angry. Do you know about the Chinese room argument?

Taylor Owen: No. No. Can you explain that quickly?

Joanna Bryson: Yeah, sure. The idea is that you have a room and there's a person inside the room who only speaks English, but the room has loads and loads of books. And then if you put a question into the room, you pass it through old style, on paper, because this is such an old argument. And then the person looks through all the books on the walls and looks up all the characters and produces an answer and then passes it back out the other side. And so the room knows Chinese but the person doesn't. So this is supposed to be a proof that AI is impossible because you could make this huge system that can solve a problem and the person in the middle of it doesn't know what's going on. But that's just so ridiculous. That's the same way our brains work. It's not like our individual brain cells know English either. So the idea that one component of an intelligent system is not the entire intelligent system is, well, obvious, basically, and it doesn't disprove anything.

David Skok: So your research on the fundamental dynamics of human psychology and also in animal behaviour has given you an interesting perspective on how we make use of technology. And I'm curious if you can tell us a bit about how looking at the natural world can help us understand our relationship with technology today.

Joanna Bryson: Right. Well, I don't know if I can easily answer the second part of that question, but let me go back to your first part. I do think that right from the beginning, because I had a good education in behavioural science, I had learned how intelligence really works in animals and people. And so then when I took my AI courses, well, I took one at Edinburgh and then I was at MIT and I was tutoring the undergraduate AI course for Patrick Winston, it was pretty cool. But anyway, a lot of students would come at the beginning of the course and they would say, "Oh boy, oh boy, I'm going to learn about AI." At the end of the course they said, "Oh, that's not intelligence." And I didn't have that response. So I think one of the big advantages I had coming into this was that I knew what human and animal intelligence were like: that they are limited, that we confabulate our memories, that we don't have perfect access to everything that we feel. That there's implicit and explicit knowledge, and that became a really big deal a few years ago when we had our paper come out about AI replicating implicit bias.

Taylor Owen: Can you explain a bit about the differences there?

Joanna Bryson: Oh yeah, sure. It's both trivial and completely difficult, but I'll go ahead and make a try. Explicit knowledge in humans, it's the stuff you can tell people. So I could tell you what I had for breakfast this morning or for lunch, there's things I can remember. So there's things that we can talk about and then there's other things we can't talk about, like how do I catch a ball? I don't remember where I learned to do that. And I certainly can't tell you which muscles I change, but in some sense my being knows that, there's some part of me that knows that. So the implicit biases are measured by seeing how quickly you can push a button. Say you have one button that's for either men's names or math skills and another button that's for either women's names or reading skills, and you have to look and see which button to push when a bunch of words are flashed in front of you. Because our culture more often associates men with math and women with reading, that task is easier than if you had to do it the other way around, if you had to associate both women's names and math skills, and men's names and writing skills. So explicitly, you might say, "Of course women and men are equally likely to be good at math or good at reading." But you implicitly are showing that you have expectations that are derived from what you experience or what gets expressed in your culture a lot. The explicit mind and the implicit mind have different jobs to do. And I think that was one of the cool things that came out of really teasing apart these two pieces. And so the thing I was expecting, it was my idea in the first place, was to check whether you see the same thing when you do large corpus linguistics, which is the way that you get semantics done for the web, so how web search works. So you just do a simple thing with counting words and seeing where they appear, and you figure out which ones mean similar things, and that's how computers know what words mean.
So as soon as I found out about the implicit association test, I said, "Oh, I bet that would be replicated by AI with large corpus linguistics." And actually the researcher that was giving the talk said, "No, you don't understand. This is about how evil people are or whatever." And it's like, "Okay." So I never really had a natural language program, but I was running a few tests on this and getting some pretty good evidence with undergraduates. And then when I came to Princeton, I found people that were much more expert than I was in contemporary word embedding technology. And so we really ran the careful test and we found it everywhere. So that was my idea, let's just see if it's there, and it was. But one of my coauthors, Arvind Narayanan, said, "Well wait, this stuff is pretty weird. I want to see how this relates to the real world." And it turns out that the bias that's in the word embeddings, so the bias in the AI that is the same as the implicit bias that was recorded by the psychologists in humans, turns out to be incredibly well correlated with the actual world. So these terrible sexist word embeddings that think that women are unlikely to be programmers, but nurses are quite likely to be women, those word embeddings are pretty accurate when you compare them to the US labor statistics. So what that tells us is that our implicit biases are derived from our lived experience. And what's the explicit for? The explicit is where we're actually making new and better plans. So that's why it makes sense that we say, "Okay, we're not going to be sexist and assume that a woman can't be a good programmer." Hopefully we don't, when someone walks through the door, say, "Oh, you're the wrong gender." But rather we can say, "Let's try to work hard and make sure that everybody has an equal shot on this." And not let the history determine the future. Does that make sense?
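
Editor's illustration: the "counting words and seeing where they appear" idea described above can be sketched in a few lines of Python. This is only a toy under stated assumptions, not the method used in the study. The four-sentence corpus is invented for illustration, the names `cooccurrence_vectors`, `cosine` and `association` are hypothetical helpers, and raw co-occurrence counts stand in for the trained word embeddings and statistical tests the researchers worked with.

```python
import math
from collections import Counter, defaultdict

# Invented toy corpus (an assumption for illustration, not data from the study).
TOY_CORPUS = [
    "he solved the math problem",
    "he likes math and numbers",
    "she finished the reading assignment",
    "she likes reading and poetry",
]

def cooccurrence_vectors(sentences, window=2):
    """Map each word to counts of the words seen within `window` positions of it."""
    vectors = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.lower().split()
        for i, word in enumerate(tokens):
            start, end = max(0, i - window), min(len(tokens), i + window + 1)
            for j in range(start, end):
                if j != i:
                    vectors[word][tokens[j]] += 1
    return vectors

def cosine(u, v):
    """Cosine similarity between two sparse count vectors (0.0 if either is empty)."""
    dot = sum(count * v[key] for key, count in u.items() if key in v)
    norm_u = math.sqrt(sum(c * c for c in u.values()))
    norm_v = math.sqrt(sum(c * c for c in v.values()))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)

def association(word, attrs_a, attrs_b, vectors):
    """Mean similarity of `word` to attribute set A minus mean similarity to set B."""
    mean_a = sum(cosine(vectors[word], vectors[a]) for a in attrs_a) / len(attrs_a)
    mean_b = sum(cosine(vectors[word], vectors[b]) for b in attrs_b) / len(attrs_b)
    return mean_a - mean_b

vectors = cooccurrence_vectors(TOY_CORPUS)
print("he :", association("he", ["math"], ["reading"], vectors))
print("she:", association("she", ["math"], ["reading"], vectors))
```

In this toy, a positive score means the word shares more contexts with "math" than with "reading", and a negative score means the reverse. The score reflects nothing but the text that was counted, which is the point made above: the associations are inherited from the corpus.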

David Skok: It does. And what I'm finding fascinating about what you're talking about is that the word choices, the implicit or explicit, these are things that as a journalist, we think about, or that I think about a lot, but I don't articulate often. It's implied, if you will. So defining AI is one part of it, but I'm curious, you've said previously when people worry about AI, that they worry about the wrong things. So what should we be worrying about when it comes to artificial intelligence?

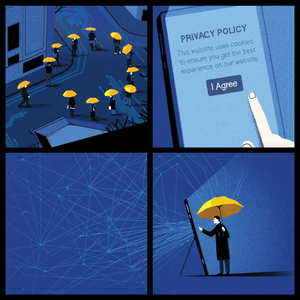

Joanna Bryson: Oh, I think I said that almost a decade or so, maybe two decades ago, in my webpage about AI ethics. And now it's almost gotten to the point where AI is so pervasive that asking what we should worry about with respect to AI, it's really like asking what we should worry about with respect to our culture and our society. So I think the biggest thing that's new is that we can predict the behaviour of each other so well. It used to be we would talk about like, "Oh you need to practice data hygiene and be careful not to leak too much information about yourself." The point is that so many people are willing to give up their data in exchange for whatever services and things, that even if there is someone who didn't have any digital devices, never bled any data themselves, we've learned so much about people that now we can make pretty accurate predictions. And again, this is hugely controversial, but you can make surprisingly accurate predictions from things like video, like facial capture, all these kinds of things, all these technologies now. So I think we now have to defend the data space of people the same way that we defend the private property of people. So it's not that the police or the army or whatever couldn't take your private property, it's that society would be too disrupted if they did that. If you come home and someone's taken your stuff, there's things that you can do. There's people you can report that to and you have a pretty good hope that most people want society to be stable and safe for everyone. So the data space has gotten like that now too. We have to somehow be able to detect if people are using data inappropriately and then we have to be able to penalize those people.

David Skok: So do you think enough people understand what it is yet? I mean, when you say that, it strikes me that the warning systems need to be in place so people understand the ramifications and I'm not convinced that they do yet.

Joanna Bryson: When it was like, "Oh, you haven't practiced good enough data hygiene," well then you're actually saying everyone has to know enough to be able to protect themselves. But mostly liberal democracy, although it is focused on individual empowerment, also knows that you're never going to get everyone to understand things. What you need is to have the mechanisms in place, and then there's some interaction between concerned citizens and NGOs and police, proactive policing, the FBI, whatever. There's all sorts of people that can then be watching over this. I mean, the European Union has done this with their General Data Protection Regulation, the GDPR. I heard a very interesting debate once at the American Political Science Association meeting, I guess a couple of years ago, and somebody asked from the audience, "What do you think of the GDPR?" And it was a bunch of American cybersecurity experts and a couple said, "Oh, it's Europe deciding that China was right." Because China had built that great firewall. And then a bunch of the other ones corrected them and said, "No, no, that's not true at all." What they've realized is that their data is an extension of their citizens. And it's the job of a nation to defend, actually, not only its citizens but everybody within its borders; that's something that's been established in the last century, that nations are responsible for the human rights of any human inside of their borders. So since people's wellbeing can be affected by others knowing information about them, having access to their private data, the nations also have to defend that private data. I think of it as being like the airspace. Just as you can't defend your country if you don't block bombers from flying over it, you can't defend your citizens if you allow anybody to just harvest their data and then feed them disinformation.

Taylor Owen: Isn't there something fundamentally collectivist about AI though, in the aggregate, where focusing too much on the individual and individual rights might lose sight of where the potential danger and power is, which is in receiving all of this and making decisions about collectives?

Joanna Bryson: That's really interesting and I think we need to untangle a little bit more of what you've said, but let me agree with you in two totally radically weirdly different ways. And one of them is Chinese colleagues. So one of the weird things that's happened to me in the last year was, I'm a British citizen as well as an American citizen, and Theresa May's government wanted us to be special AI buddies, including AI ethics buddies with China. And so I wound up in a meeting between British and Chinese experts on AI ethics. And the Chinese experts were saying, "What is it about all these Western principles? They're only defending the individual and they're not defending the collective. Because of course the individual matters, but if there's no collective to defend the individual, why aren't you ever talking about the rights of the collective?" And my answer was that, well, as I understood it, that at least in the 20th century, you were more likely to be killed by your own country than by somebody else's. So that was why people were worried about that in Europe.

Taylor Owen: Well also a liberal economic model too, right? That Preferentialize.

Joanna Bryson: Yeah. But the focusing on individual agency, which individuals want to believe they have, is a means of deception so that we don't look at the larger actors and we don't look at the real causes. So I think this happens not only at the level of blaming people for bleeding too much data like, "Oh you used a credit card, okay, well then you get whatever you deserve." But also then you see people saying, "Oh we need to hold programmers accountable like we would hold doctors accountable." Well, programmers, I mean there are people out there where it's like one or two programmers have put together the whole app and it's really them. Even Oculus Rift, I think when it was purchased, was like 12 people. But quite a lot of programmers do not have that much agency. They're part of a much larger organization. Arguably some of the social media companies, and I don't want to defend any particular company because I don't know for sure, I think it's a discoverable matter of fact what they were thinking. But some of them really were out just to make a toy and then hadn't recognized that they suddenly had created this incredibly powerful political structure. And so the question is, at what point does that become negligent? But anyway, it may be that the individual programmers don't realize what they're doing and don't have a way of finding out what they're doing and there's some evil genius in a backroom that does. Or it could also be that nobody has been paying attention to the actual consequences, though they could have known the actual consequences of creating new communication technologies. But now that we know more, their obligations have changed. And as I understand it, and again, I'm not a corporate law person, but as I understand it, as you dominate a market or as you get more wealth or more success, you just do have more obligations and then you need to level up. You need to level up your corporate oversight.

Taylor Owen: Yeah. And that points to some of the downside risks of this technology being political and economic. And that's something we're debating actively in Canada right now, as the companies that are deploying and building the technology are both deploying it in Canada and building it in Canada. So we have this huge push for foreign direct investment in Canada from the big tech companies, who are setting up huge AI labs. But most of the economic benefit of that technology is leaving the country and we're really struggling at the same time to govern how it's going to be deployed. That's a political challenge, more than anything.

Joanna Bryson: That's a huge political challenge and that doesn't reduce it. That goes back to what I was saying before about, I'm not sure that these are just AI ethics issues, that some of them are really about transnational governance and I don't think it's only about AI. So I suspect the same things are happening with pharmaceuticals and petrochemicals. All kinds of companies have natural catchment areas that are greater than the boundaries of a reasonably sized geographic entity to govern. So government is traditionally the part of our economy, of our society, that we've given the task of redistribution to. The job of government is to take collective resources and solve collective infrastructural problems. And so when you don't see adequate redistribution, then that means there's some failure of government. But that made more sense when the companies operated within the zone of a single government. I think the really super important thing about the GDPR is that the way the EU works is that there isn't one set of laws or one nation, one army. The countries that make up the EU are the ones that have the laws and the ones that have the military and the ones that have the police and whatever. What they call it is harmonizing. They harmonize their laws sufficiently that they get the power of a much larger entity and then they can say, "Okay, if you want to do business with any of us, then you have to meet this set of criteria." And at first big tech fought really hard against that. And then when it actually had all gone through, everyone wanted to do business in Europe, so they followed the law and then they realized, at least big tech said, "Hey, wow, there's a single API to 28 countries, this is great." So they had fought against something that actually helped them. Government is not an inhibition. Government is mostly a way to make it easier to do business in a more stable and clear environment.
And I think one of the things that tech companies haven't got their head around is that it's to their advantage to help government do its job. And that includes paying taxes, but it also includes giving adequate information so that, for example, tech companies don't have to police other tech companies. If they think that there's a race to the bottom, they can just report it, and you don't reach the bottom that way.

David Skok: Right. And that resistance to GDPR all of a sudden shifted. I was in San Francisco a couple of months ago and with every tech company that I spoke to there, the first thing that came out of their mouth was that they were GDPR compliant. It was almost like the old Intel branding, the Intel Inside: every company wanted to brand themselves as being GDPR compliant because it was more uniform. But let's consider for a second that regulation has been solved and talk about how AI could impact the job market. I recall an Oxford Institute survey several years ago that showed 47% of all jobs in the United States were at risk of automation. And at the time it caused quite an uproar, and I think it may still. Combining that stat, the fear of automation, with the rise of Donald Trump and populism and nationalism, I'm curious from your perspective how correlated are these two things, being inequality and polarization?

Joanna Bryson: Yeah, yeah. So actually, I think there's two radically different parts of that question. One is where do polarization and populism come from, which are not necessarily the same thing either. And then the other is, does AI actually eradicate jobs? And so let me deal with that second one first. This thing about, will robots take all the jobs. First of all, no, robots don't do anything. What you're really talking about is, will large companies fully automate parts of their business process? Will companies decide that they want fewer people? And what we can see historically when that happens, I mean I'm old enough, I remember the PC revolution, what would happen is a small company would have three secretaries, and then when they got the PCs all the secretaries were more productive and they would fire the less productive secretaries and just keep the one or two best secretaries because they could then do more stuff. And so you would think, "Oh well, what happened to all those secretaries?" Well, more companies were founded because they could do more with fewer people and so you could have more companies. It's the same thing with, famously, the automated teller machines, which also came in the 1980s, and at least in 2015 there were actually more bank tellers than there had been before the invention of automated teller machines, and they were actually getting paid more too. And the reason is that having the automated teller machines take care of most of the things that you needed from a bank allowed the banks to have more, smaller branches that were out closer to the people that wanted to use them, that's the customers. By making the branches cheaper, you actually wound up employing more tellers. Basically, there's nothing about AI that unemploys people.
If I wrote a program that suddenly made teachers twice as effective, there's nothing in that that would say whether kids would get twice as good an education or we taxpayers would only have to pay for half as many teachers. That's a political decision too. So it's not that AI gets rid of jobs, and in fact historically it's increased jobs. However, there is this problem, as I think I mentioned earlier, that basically when you have high levels of inequality, government is failing you, something isn't happening. And what we've seen with populism is that we do tend to see the populist movements do better in regions with very high inequality, and in a way that makes sense. In those regions that have the high inequality, both the rich and the poor feel threatened. They realize that there's something really going wrong and they fall back into some kind of identity politics.

Taylor Owen: Coming back to the AI question and I guess if the real concern here is the way in which the technology could be deployed, if we're saying there's a political and economic cost or potential consequences to the deployment. How do you see the conversation about holding the companies accountable, who increasingly have the monopoly over that technology and capacity?

Joanna Bryson: Yeah, so again, that's why I talked about the GDPR thing because it's a demonstration that you can do that. A lot of people seem to be afraid to do that and you just have to wonder how big of a crisis there has to be before people really act.

Taylor Owen: There's a big debate in Canada about whether we should continue on with the integration, particularly with the flows of data and the economic corporate integration with American tech firms or join with the EU in a new regulatory model. What would you advise on that and particularly in the AI space, should Trudeau be partnering with Macron and the EU and extend GDPR provisions to Canada or continue down this path of integration with US?

Joanna Bryson: Well, I wouldn't want to get into a false dichotomy. I think that it's worth trying to do both. I personally think that the EU is the best example we've got so far. More generally, what whoever does this needs to do, and I'd love to see Canada working on this both within whatever NAFTA is currently called and with the EU, is just communicate to regulators how to regulate technology. And so one of the things I found really useful lately is pointing out that people are almost defining AI as that which cannot be regulated. So some companies are trying to say that if there's not deep neural networks in it, it's not AI. And so there's two problems there. One is even if there's a deep neural network in it, you can still say, "Okay, so how was it developed? How was it tested? Which data did they use? What was it designed to do? How is the system architected that used deep networks in it? How are you monitoring it?" There's a whole lot; every aspect of that is human activity and it can be logged, and you can show whether you've done due diligence or not. So nobody really cares what the little weights are doing. It's like worrying about what all the synapses are doing inside of a brain; you just don't need to know that in order to tell whether or not somebody has done the right or wrong thing. And similarly we need to be able to tell, were the corporations knowingly doing the right or wrong thing when they trained the networks up or when they ran things, were they adequately informed? Maybe they just were naive, but they shouldn't be naive anymore because things have moved on and there's corporate standards that they should have been aware of when they passed some threshold of incorporation or of wealth. When I go and talk to governments, it's like it's news to them. They don't know that software is written down, because people have been spewing disinformation about it.
And I said, "So first of all it's not true that you can't regulate deep learning, but secondly, it's ridiculous. The definition that I gave you of AI was nothing like that." And I think that's because some of the big companies like Google, they're trying to say, "Oh we didn't use to use AI until two years ago." It's like, no, they've always been an AI company, but they're just trying to make sure that these new regulations don't, in any way, affect their core business, their search engine or their ad tech or whatever. So I think what we should be doing, it's like, let's not even argue about what AI is. Let's just talk about how do you govern the manufacturing of software. And you can go through and account for whether it was done accurately and legitimately and with due diligence.
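
Editor's illustration: the questions listed above (how it was developed, how it was tested, which data was used, what it was designed to do, how it is architected, how it is monitored) can be kept as a simple written record. The Python sketch below is a hypothetical minimal form of such a record, not a description of any regulator's or company's actual process; the class name `DueDiligenceRecord`, its field names and the helper `missing_answers` are all assumptions made for illustration.

```python
from dataclasses import dataclass, fields

@dataclass
class DueDiligenceRecord:
    """One free-text answer per question raised in the interview (hypothetical fields)."""
    development_process: str = ""   # How was it developed?
    testing: str = ""               # How was it tested?
    training_data: str = ""         # Which data did they use?
    intended_purpose: str = ""      # What was it designed to do?
    architecture: str = ""          # How is the system architected?
    monitoring: str = ""            # How are you monitoring it?

def missing_answers(record):
    """Return the names of the questions that have no recorded answer."""
    return [f.name for f in fields(record) if not getattr(record, f.name).strip()]

# Example with invented answers: two questions are still unanswered.
record = DueDiligenceRecord(
    development_process="Tracked in revision control with code review",
    testing="Held-out evaluation set plus manual review",
    training_data="Licensed customer-support transcripts",
    intended_purpose="Routing support tickets to the right team",
)
print(missing_answers(record))
```

A check like this says nothing about what the network's weights are doing. It only shows whether the human decisions around the system were written down, which is the kind of evidence of due diligence the interview describes.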

David Skok: It's more just a clarification, I'm trying to understand what you're saying, which is, don't focus on regulating the outcomes, focus on regulating the inputs. And how the sausage is made is actually more important in putting guardrails up than a grand declaration around what the outcomes should or shouldn't be?

Joanna Bryson: Well, no, I wouldn't say that, both are important. So it's when you see that there's a problem with the outcomes, that's what flags it up, because we don't have time to check everything as it happens. Although maybe with AI that'll change, I don't think so. Although we might catch a few more things before they get out, but if there's something that gets out that we didn't anticipate, we need to be able to go back then and say, "Okay, did you follow a good process?" A car manufacturer, they're always recording what they did because they do a phenomenally dangerous and possibly hazardous thing we shouldn't maybe have ever legalized. But anyway, we do have now these private internal combustion engine things and they're running around, and if one of them goes wrong and the brakes don't work, we can go back and say, "Why did the brakes not work?" And figure out whose fault it is and we can say, "Okay, you've got to do this recall. You've got to pay this liability, whatever." It's the same thing with software. It should be the same thing with software. And most of the software industry does keep control of things like revision control logs and things like that, for its own benefit, so that they can figure out why their software works and so that they can debug it. Unfortunately, AI companies, from what I've heard, and I haven't done these audits myself, but from what I've heard, a lot of AI companies do not follow good software engineering practice, and so that indicates even the people within the companies don't think of themselves as doing ordinary software engineering. And maybe that's because they've come out of, I don't know, political science or statistics or something and they don't get taught that as undergraduates. But it may be just this mystification and anthropomorphism that's around AI that makes people forget that they need to follow good engineering practices. But they do.

David Skok: We've spent a lot of time, most of our time talking about the perils and the dangers and how we can protect ourselves and humanity. I suppose to end, I'm just curious, what are you hopeful about with the technology? What are the things that you look at and go, "This can really be a remarkable thing and I'm so optimistic about what we can achieve."

Joanna Bryson: I think we can lose sight of this, but again, as somebody with a decent memory that's getting old, I just can't believe how empowered we all are. Just the fact that you can go into a town you don't know and find the good restaurants. I mean, not even good, find the kinds of restaurants that you would like. I mean, one thing I got very excited about was when somebody was talking about live real time translation, that the hope that everybody should be able to speak their native language in all kinds of contexts, artistic or scientific or political. It would be so nice to be able to put us on a more level playing ground that way. So yeah, no, I mean technology is one of the ways by which we're solving lots of problems. Oh, and another thing is we're now able to perceive damage that we're doing to the biosphere and hopefully having that strong evidence, if we can overcome some of the political problems we talked about before, will help us come to solutions. I guess I have a lot of hope that we keep making ourselves smarter and able to perceive more. And I hope that we're also going to be focusing on individual lives and helping people find individual actualizations. So the potential is all out there. I think the most fundamental problem is making sure that we do focus resources on the right kinds of problems, so that is governance and regulation and that's why I'm moving to a governance school. It's because I think that's the biggest problem right now.

David Skok: So bringing humanity to engineering and bringing engineering to humanity is one way we can close this gap. Joanna, it's been terrific to talk to you. Thank you so much for taking the time.

Joanna Bryson: Well thank you.

[MUSIC]

Taylor Owen: So it was great to hear from Joanna and to get her take on how to address some of these issues around AI and coding.

David Skok: Yeah, and it's come up in a few of our other episodes too, that we know there are real-world impacts to the coding and development that is done, whether that's in advertising systems or military equipment, and the implicit bias that comes with that.

Taylor Owen: Yeah, absolutely. I mean, just a few years ago, I think these AI systems were seen largely as neutral technologies. As things that would be deployed in society and bring more fairness to the decisions that were being made with them. But we know pretty clearly now that that's not the case. AI is embedded with the biases and subjectivities of the data that it's built on. So we know everything from the use of AI for police targeting or for criminal sentencing or for determining insurance rates, are all deeply biased because the data we have about society is biased.

David Skok: Yeah, the coding is only as good as the humans who code it.

Taylor Owen: Absolutely. And the way the data was collected on top of it. So it's a very complicated issue that governments all of a sudden see a real urgency in. They know that this technology is evolving so quickly and it's getting out of their grasp. And it's people like Joanna that are bringing this conversation about ethics and bias and transparency and accountability, right into these conversations the governments are having.

David Skok: We talk about governments and now just coming off the heels of the World Economic Forum, there was a lot of talk about corporate social responsibility and sustainability. How far does that go into the bowels of an organization and its development? At what point can you hold a tech company responsible for its social responsibility and its coding?

Taylor Owen: Well, I mean we hold companies responsible for their behaviours all the time. I don't see why it would be different with AI. It's more complicated because it's a different type of technology and it's, by definition, outside of the bounds of human knowledge, it's machines making decisions, not humans. But at some point our society is based on holding humans accountable. Whether they be people in charge of companies or in charge of militaries or in charge of police departments and how they use the tool of AI is a point on which they will increasingly be held accountable.

David Skok: My pushback on that is the challenge that you have of, okay, how do you do that without stifling innovation? At the end of the day, nobody wants to decrease productivity and decrease the innovation that happens in these spaces, or do they?

Taylor Owen: Well, I mean there's a real question of whether AI does increase productivity or not. If it's wiping out job markets and enriching potentially monopolistic companies, is that making an economy more dynamic? It's not always doing that, but it has the potential to. And you're increasingly seeing the leaders of some of these large global tech companies calling for more regulation. They want the rules of the road and the guardrails put in place so that when they build tools, they're doing so within a legal structure that they know is in the bounds of the legal system they function in. So the CEO of Google has just called, for example, to ban facial recognition technology until we figure out how to govern it. So I think this dichotomy between innovation and deregulation or lack of regulation, it's a bit of a straw man in this case.

David Skok: Well, before we open up this can of worms into another episode, just to mention that Joanna herself talked about this in our conversation when she said, if you build an AI teacher, does it mean you need less teachers? And her argument is no, and that that relies on politics. We would love to get your thoughts on this issue and all of the podcasts that we've had so far. Let us know how you feel about these issues on Twitter using the hashtag #BigTechPodcast.

Taylor Owen: Thanks for listening. We wish Joanna Bryson success in her new role as a professor of ethics and technology at the Hertie School of Governance.

David Skok: I'm David Skok, Editor-in-Chief of The Logic.

Taylor Owen: And I'm Taylor Owen, CIGI senior fellow and professor at the Max Bell School of Public Policy at McGill. Bye for now.

[MUSIC]

Narrator: The Big Tech podcast is a partnership between the Centre for International Governance Innovation, CIGI, and The Logic. CIGI is a Canadian non-partisan think tank focused on international governance, economy and law. The Logic is an award-winning digital publication reporting on the innovation economy. Big Tech is produced and edited by Trevor Hunsberger, and Kate Rowswell is our story producer. Visit www.bigtechpodcast.com for more information about the show.